How to Calculate Releases

TL;DR

- Once modern software projects are underway, realistic completion dates or release content can be predicted by analyzing the data contained in the issue tracker.

- Many organizations still fail to do this correctly and are caught off guard when they suddenly find themselves over time and over budget.

- The key is to realize that much, and often most, of the remaining work is not just un-estimated. It is not even known yet.

- Our method and tools provide you with calculations for all of these unknowns.

- While individual work items are subject to judgment and variation, the statistical law of large numbers makes the calculation sound for the total work.

- You can use this to predict the end date in a fixed scope process or, vice versa, the delivered scope in a fixed date process.

Unnecessary surprises

Many common approaches attempt to assess whether a running development project is still on track. Financial project controlling for example compares plan versus actual spending, velocities are calculated, burn-down and Gantt charts are drawn. And still, shortly before what was thought to be the end date, companies are often surprised that the project requires much more time and budget and/or will only complete a fraction of the intended scope. This article explains the reasons and presents a method to get a reliable quantitative grip on all these uncertainties and unknowns.

The insights apply regardless of the process or method used. We show both how to calculate the release date for a fixed scope (such as in a fixed-price project) and how to determine the set of features done by a timeboxed release date (e.g. in continuous product development). For the discussion, we use the most common terms from present-day agile project management, which can easily be translated to the terminology of your local process (on the scaled level e.g. SAFe, Nexus, LeSS, or custom).

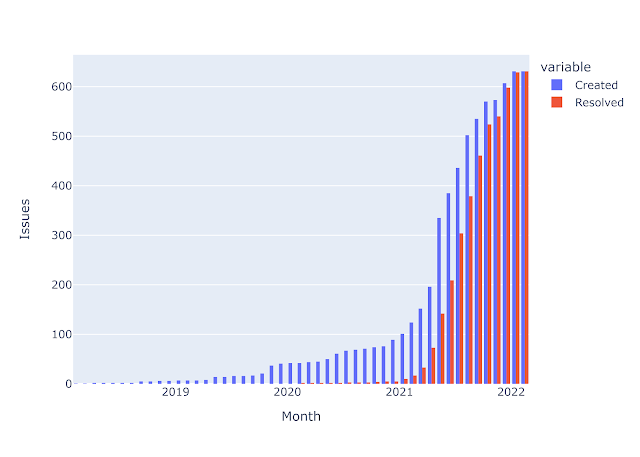

Total remaining work

Nowadays, project work is usually managed by keeping a backlog of issues in a tracker, such as Jira, OpenProject, Redmine or the corresponding functionality of GitHub, GitLab, etc. An issue (or work item or backlog item) can be a requirement of different granularity (story, feature, epic, task etc.) or it can be a bug. The following screenshot shows a small excerpt of such a backlog, as you probably know it from your projects. The complete project (Apache Spark, as an example with publicly available information) contains a total of 44,000 issues at the time of writing, of which 2,600 are open; 400 new issues are created every month.

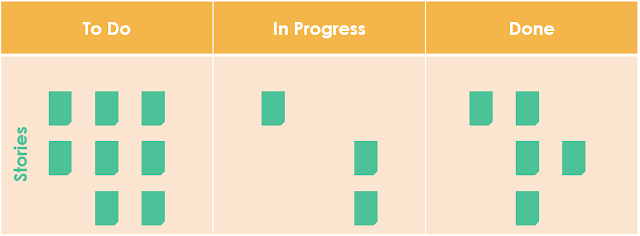

To illustrate the following explanations, we visualize the product backlog in the form of a Kanban or Scrum board. In its basic form, it has issues already done, currently in progress and still to do. To simplify the discussion for a start, we first focus on stories and discuss further issue types later. Remember that each field can in practice contain thousands of issues.

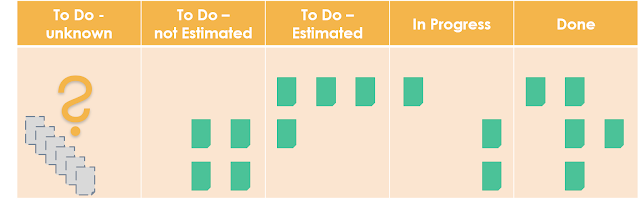

Unfortunately though, this picture is incomplete and neglects a large part of the whole remaining work. In projects of serious size, not all stories are known from the beginning. This is one of the main reasons to develop in an agile manner instead of in sequential phases (“waterfall”). (Funnily enough, it seems to be a common pitfall to act and plan as if agile prevented this from happening, instead of just providing better means to deal with it.) So far, our board only covers issues that are already known at the current point in time. To get the complete work, we need to include those that will be discovered later in the project.

Furthermore, the already known issues are often not all estimated. Un-estimated issues must not be forgotten and have to be accounted for appropriately.

Unknown and un-estimated work is usually the main reason why predictions fall short and are too optimistic, often by a multiple. Even if a project applies newer methods for progress tracking, such as sprint velocity, unknown issues are often disregarded or only represented by a small buffer. Known but not yet estimated issues are easily neglected as well. In consequence, the bulk of the work is effectively ignored or heavily underestimated. And even if there is some awareness on the operative level, it can still get lost on the way up the reporting chain.

In the following picture, we split the todo column into estimated and un-estimated, and extend the board with unknown todo issues.

Calculating remaining effort

To project a realistic release date or content, we first need the total effort of all stories - whether already known or not. Some computations can be based on different parameters and with a different degree of detail. In an agile and iterative manner, we first provide an initial baseline calculation, which can be quickly applied and already gives reasonable results. After that, we iteratively refine further details of the calculation and increase its precision.

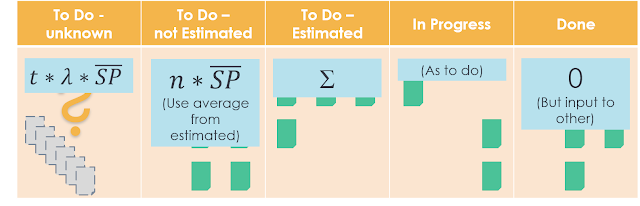

Done issues: Done issues do not require any immediate effort by definition. Nevertheless, their efforts and estimations still have to be tracked, because these are input to other calculations below.

Issues in progress: We can count items in progress (e.g. planned for the currently running sprint) among those still to do. This simplification correctly reflects the status directly after a sprint, e.g. when a snapshot of the backlog is pulled from the tracker after the sprint review or during sprint planning.

Estimated stories: If you are using story points for estimation, these can simply be added up. If the team instead estimates in man days, you have to distinguish between estimated (a priori, un-adjusted) and actual (a posteriori, adjusted) man days and use the former here. (For better transparency, it is good practice to always refer to upfront estimations as story points, even if the estimation was done in units of man days.)

Un-estimated stories: Calculate the average story size of all stories estimated so far (both done and not done). (Use the original estimation for done stories as well. The actual effort of the done stories, which is known by now, is instead reflected in the velocity.) This provides a proxy estimation for all un-estimated stories.
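The proxy estimation can be sketched in a few lines of Python. The function names and the sample numbers are illustrative assumptions, not part of any tracker API; `None` stands for a story without an estimate.

```python
from statistics import mean
from typing import Optional, Sequence


def proxy_estimate(estimates: Sequence[Optional[float]]) -> float:
    """Average original estimate over all estimated stories (done and open)."""
    known = [e for e in estimates if e is not None]
    if not known:
        raise ValueError("no estimated stories to average over")
    return mean(known)


def known_remaining_effort(open_estimates: Sequence[Optional[float]],
                           proxy: float) -> float:
    """Sum the open stories, substituting the proxy for un-estimated ones."""
    return sum(proxy if e is None else e for e in open_estimates)


# Illustrative data (assumed): original estimates in story points.
done_stories = [3, 5, 8, 2]
open_stories = [5, None, 3, None]  # None = not yet estimated

proxy = proxy_estimate(done_stories + open_stories)
remaining = known_remaining_effort(open_stories, proxy)
print(f"proxy size: {proxy:.2f}, known remaining effort: {remaining:.2f}")
```

Note that the proxy is deliberately computed from the original estimates of the done stories, not from their actual effort, so that it stays in the same unit as the open estimates.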

Yet unknown stories: Now comes the interesting part - estimating stories we have to expect in the future but do not even know yet. You can approach this based on different information and parameters. We start with a simple baseline calculation using the average discovery rate (or arrival rate) of new stories per sprint, which can be deduced from the creation dates in the tracker. At first glance, this seems overly conservative. Nevertheless, it very often matches the observation, except at the very beginning of a project. Possible enhancements are given in the refinement below. The new work to be expected is then the discovery rate multiplied by the time to release.

The time to release is measured e.g. in number of sprints. For a fixed release date, it is obviously given. Its computation for a fixed scope is shown in the next section. The total remaining effort is of course simply the sum of the estimated, un-estimated and yet unknown effort.
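For a given time to release, the baseline sums can be sketched as follows. This is a minimal illustration under stated assumptions: the discovery rate is expressed in story points per sprint by multiplying the average number of new stories per sprint with the average story size, and all names and numbers are made up for the example.

```python
from statistics import mean
from typing import Sequence


def discovery_rate(new_stories_per_sprint: Sequence[int],
                   avg_story_size: float) -> float:
    """Average newly discovered story points per sprint."""
    return mean(new_stories_per_sprint) * avg_story_size


def unknown_effort(rate: float, sprints_to_release: float) -> float:
    """Effort of stories expected to be discovered until the release."""
    return rate * sprints_to_release


def total_remaining_effort(estimated: float, unestimated: float,
                           unknown: float) -> float:
    """Total remaining effort = estimated + proxy-estimated + yet unknown."""
    return estimated + unestimated + unknown


# Illustrative numbers (assumed): stories created in each of the last 4 sprints.
rate = discovery_rate([4, 6, 5, 5], avg_story_size=4.0)
unknown = unknown_effort(rate, sprints_to_release=6)
total = total_remaining_effort(estimated=300.0, unestimated=80.0, unknown=unknown)
print(rate, unknown, total)
```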

The previous effort calculations are summarized in the following figure:

Release date

We now have first formulas for the remaining effort. To calculate the remaining time, we define the average progress as the velocity reduced by the discovery rate. It measures how fast the known scope is reduced.

The number of remaining sprints until the known scope is implemented and its size reaches zero is then the known remaining scope divided by the progress.
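Written out in symbols, the baseline calculation can be summarized as below. The symbol names are notation assumed here for illustration: $S$ is the known remaining scope (estimated plus proxy-estimated stories), $v$ the velocity and $d$ the discovery rate (both in story points per sprint), $p$ the progress, $T$ the number of remaining sprints, $U$ the yet unknown work and $R$ the total remaining effort.

$$
p = v - d, \qquad T = \frac{S}{p} = \frac{S}{v - d}, \qquad U = d \cdot T, \qquad R = S + U
$$

The expression for $T$ follows from requiring that the work delivered until the release equals the total remaining effort: $v \cdot T = R = S + d \cdot T$, which rearranges to $T \, (v - d) = S$.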

Continuously discovering functionality half the size of what has just been implemented (discovery is half of velocity) will, for example, double the required time. This effect is easily ignored and might be a further reason for heavy overruns in many projects. Obviously, if the newly discovered story points exceeded the implemented ones, the project would never finish. (This may be a frequent phenomenon at the very beginning of the project and is then harmless - see Trend analysis below for how to handle this case.)
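A small Python sketch makes the doubling effect and the "never finishes" case concrete. The function name and the numbers are assumptions chosen for illustration.

```python
def remaining_sprints(known_scope: float, velocity: float,
                      discovery_rate: float) -> float:
    """Sprints until the known scope reaches zero.

    All arguments are in story points (per sprint for the two rates).
    """
    progress = velocity - discovery_rate
    if progress <= 0:
        # Scope grows at least as fast as it is implemented.
        raise ValueError("discovery rate >= velocity: the project never finishes")
    return known_scope / progress


# Illustrative numbers (assumed).
without_discovery = remaining_sprints(380, velocity=40, discovery_rate=0)
with_discovery = remaining_sprints(380, velocity=40, discovery_rate=20)
print(without_discovery, with_discovery)
```

With a discovery rate of half the velocity, the second result is exactly twice the first. The delivered work over that time (40 points times the number of sprints) also equals the known scope plus everything discovered along the way, which is a useful sanity check.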

Scope done until a fixed date

If your process has a fixed release date, the scope inevitably becomes variable in real life. The sum of released story points can equally be calculated as the number of remaining sprints times the velocity:

Beware that this set of shipped stories will consist of both already known stories and stories discovered in the future. So, how much of the currently known scope will be part of the release then?

In the worst case, all future stories will have higher priority than the already known ones, pushing these “down” in the backlog. The released known scope will then be the highest-prioritized known stories that fit into the capacity left after subtracting the capacity consumed by new scope, i.e. the number of remaining sprints times the progress.

Assuming average priorities for upcoming scope, we can complete a corresponding fraction of known scope:
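The fixed-date cases can be sketched as follows. The worst case follows directly from the text. The "average priorities" case is an interpretation assumed here: known and newly discovered stories are taken to be released in proportion to their share of the total scope. Function names and numbers are illustrative.

```python
def released_scope(sprints: float, velocity: float) -> float:
    """Total story points shipped until the fixed date."""
    return sprints * velocity


def known_released_worst_case(known_scope: float, sprints: float,
                              velocity: float, discovery_rate: float) -> float:
    """Known scope shipped if every new story outranks all known ones."""
    left_over = sprints * (velocity - discovery_rate)
    return min(known_scope, max(0.0, left_over))


def known_released_average_priority(known_scope: float, sprints: float,
                                    velocity: float,
                                    discovery_rate: float) -> float:
    """Known scope shipped if new stories have average priority (assumption:
    known and new stories are released in proportion to their share)."""
    total_scope = known_scope + discovery_rate * sprints
    shipped = min(released_scope(sprints, velocity), total_scope)
    return shipped * known_scope / total_scope


# Illustrative numbers (assumed): 10 sprints left until the release date.
shipped = released_scope(10, velocity=40)
worst = known_released_worst_case(380, 10, velocity=40, discovery_rate=20)
average = known_released_average_priority(380, 10, velocity=40, discovery_rate=20)
print(shipped, worst, round(average, 1))
```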

2nd Iteration: Adding precision

We now have a simple way to produce a first projection, which will already reduce the error significantly in most cases. Nevertheless, there are several ways to make it more accurate.

A closer look on bugs

Following agile tradition, we have so far focused solely on functionality in the form of stories, with bug fixing implicitly included. This provides good results if the number of bugs in relation to stories remains constant. Unfortunately, this is often not the case. For a variety of reasons, bugs tend to increase towards a release. Thus, we need to treat them separately.

Un-estimated bugs: For the un-estimated bugs, calculate and sum up the average bug size the same way as for stories. If your project does not estimate bugs at all, get estimates for a small representative set of bug issues and derive average story points from these. An even better option is to use the past percentage of time spent on bug fixing versus story implementation, either from time tracking or from some representative a posteriori developer estimations. Together with the number of both done stories and done bugs, this provides you with an average bug size.

Yet unknown bugs: There are many ways to project future errors. In software, they are strongly correlated with the amount of implemented functionality, so in a simplified view, bugs are “created” while implementing stories. This corresponds to an average ratio of bugs (failure rate) per done story or per done story point. It is determined in the same manner as the other averages, from the done issues in the tracker: the number of done bugs divided by the number of done stories (or their story points).

Multiplied by the total number of remaining stories (both known and unknown) and the average bug size, it gives you the effort for the remaining bugs to be expected.

We now only have to adapt above formulas to include bug fixing efforts, for example:

The same refinement has to be applied for remaining and total scope etc. In other respects, the calculations remain the same.
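As a minimal sketch of the bug refinement, the following Python computes the failure rate per done story point and the expected bug effort for the remaining stories. The names, the choice of story points (rather than story counts) as the basis, and the numbers are assumptions for illustration.

```python
def failure_rate(done_bugs: int, done_story_points: float) -> float:
    """Average number of bugs per implemented story point."""
    return done_bugs / done_story_points


def expected_bug_effort(rate: float, remaining_story_points: float,
                        avg_bug_size: float) -> float:
    """Effort for bugs expected from the remaining (known + unknown) stories."""
    return rate * remaining_story_points * avg_bug_size


def total_remaining_with_bugs(remaining_story_points: float,
                              known_bug_effort: float,
                              expected_bugs: float) -> float:
    """Remaining story effort plus open bugs plus bugs still to be expected."""
    return remaining_story_points + known_bug_effort + expected_bugs


# Illustrative numbers (assumed).
rate = failure_rate(done_bugs=150, done_story_points=1000)  # bugs per point
future_bugs = expected_bug_effort(rate, remaining_story_points=500,
                                  avg_bug_size=2.0)
total = total_remaining_with_bugs(500, known_bug_effort=60,
                                  expected_bugs=future_bugs)
print(rate, future_bugs, total)
```

If bug fixing is included in the remaining effort like this, the velocity used for the release date has to count completed bug fixes as well, otherwise the two sides of the calculation are in different units.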

Differentiate for teams or sub-projects

In larger projects, some of the above parameters may differ across sub-projects or teams. An important example would be teams using different baselines for story point estimations. Depending on the size of your project, you can either do team-specific calculations and consolidate the results, or you can introduce adjustment factors. For example, average bug sizes and frequencies typically differ between front end and back end or between customer adaptation and core development.

Adjust velocity according to capacity

Velocity is not constant. There are vacation times, varying sick days and growing or shrinking teams. The easiest way to account for these is to document the capacity in FTE (full-time equivalents) for the past sprints and to forecast it for the upcoming sprints, applying simple cross-multiplication. Just make sure to avoid the common “Mythical Man-Month trap”: People added from outside or from different products need a significant ramp-up time until they become productive, and in the beginning they even draw capacity from already present team members.
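The cross-multiplication can be sketched in Python as below. This is only an illustration under the assumption that velocity scales linearly with the documented FTE; the function names and numbers are made up, and ramp-up effects are not modeled, so planned FTE for newly added people should be reduced by hand.

```python
from typing import List, Sequence


def velocity_per_fte(past_velocities: Sequence[float],
                     past_fte: Sequence[float]) -> float:
    """Story points delivered per FTE and sprint, averaged over past sprints."""
    if len(past_velocities) != len(past_fte):
        raise ValueError("need one FTE value per past sprint")
    return sum(past_velocities) / sum(past_fte)


def forecast_velocities(per_fte: float,
                        planned_fte: Sequence[float]) -> List[float]:
    """Expected velocity for each upcoming sprint from the planned capacity."""
    return [per_fte * fte for fte in planned_fte]


# Illustrative numbers (assumed): three past sprints, three upcoming sprints.
per_fte = velocity_per_fte([40, 36, 44], [5.0, 4.5, 5.5])
forecast = forecast_velocities(per_fte, planned_fte=[5.0, 3.0, 6.0])
print(per_fte, forecast)
```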

Trend analysis

Some of the parameters (e.g. newly discovered stories, average story size, bugs per story) change over time. If you extrapolate their future trends, you can place the resulting future values into the calculations. There exist many statistical methods for this, including easy ones. In a first step however, it is a good idea to simply create visual plots of the historical values. This allows you to spot relevant changes and to make sense of potential outliers.
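One of the easy methods is a straight-line fit through the historical values. The following sketch uses an ordinary least-squares line as an example technique (the article does not prescribe a specific one); names and numbers are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares line through (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def extrapolate(values: Sequence[float], steps_ahead: int) -> List[float]:
    """Continue the fitted line for future sprints, never below zero."""
    slope, intercept = linear_trend(values)
    n = len(values)
    return [max(0.0, intercept + slope * (n + k)) for k in range(steps_ahead)]


# Illustrative history (assumed): story points discovered per sprint.
history = [30, 28, 26, 24]
slope, intercept = linear_trend(history)
future = extrapolate(history, steps_ahead=3)
print(slope, intercept, future)
```

A straight line is only reasonable over a limited horizon; plotting the history first, as suggested above, helps to judge whether a linear continuation is plausible at all.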

Even more fine-tuning

Several additional computational steps can further improve the results. Again, in an agile manner, it is advisable to consider and implement first those that will provide the greatest improvement for your specific project(s).

Differentiate un-estimated issues

You can further refine the calculation of average issue sizes by differentiating by story priority or bug criticality. In our experience, especially bugs with higher criticality tend to require more effort on average.

Further sources for story discovery

So far, we used the arrival rate of newly discovered stories and applied a trend analysis. The trend profile can be adjusted with further input:

- The profile curve of former releases and/or related products, if such data is available.

- The effort spent and planned by the requirements teams (product management, PO, etc.), e.g. their FTE percentage on this vs. other releases or projects.

- If issues are developed from precursory work (e.g. documents accompanying a contract), an estimate can be drawn from the progress made in representing that work as issues in the backlog.

Differentiate unknown bugs

Bugs are not only detected by developers, but also by other teams or by the customers. If, for example, testing, QA or system integration is done in other teams, it is worth calculating specific discovery rates for these different sources. Quantifying parameters are, for example:

- FTE or man days of QA testing,

- Number of customers or users in production.

Furthermore, fixing bugs can unfortunately create new ones. This is already implicit in the previous calculation, but will be affected if the ratio of story to bug development changes, e.g. towards the end of the release. To account for this, separate bug ratios can be used. How to acquire information on the bug source depends on your project, e.g. a combination of the creator and the creation time of the issue or, if maintained, reference links.

Further process stages

Backlog items can have a status between or beyond “open”, “in progress” and “closed”. The Jira default configuration for example defines 25 states including “In Review”, “Under Review”, “Accepted”, etc. Check which statuses are used by your project’s individual process and which of these have to be counted in the aforementioned calculations. For example, the number and size of issues “in test” would usually be a parameter for yet unknown bugs to be expected.

Further issue types

Larger projects usually use additional issue types, such as epics, (sub-)tasks, themes, features (e.g. in SAFe) and the like. Go through each of the types used in the backlog and determine how these should be accounted for. For example: Are epics estimated on their own, and how is this estimation synchronized with the issues they are composed of? Can an expected number of stories be determined for an epic? Can (sub-)tasks provide a more detailed remaining effort for stories?

Risks obviously affect the project’s outcome as well. If they are numerous, small and evenly distributed along the course of the project, they are already implicitly included. Otherwise they can be added in the same way as a new issue type and rated with probability times impact. (This may require converting or estimating the impact in your units of effort.)

Other effort types

Large-scale developments often require efforts that are not included in standard sprint cycles, such as end-user testing, training, etc. Their size and dates depend on the results from above and can be calculated correspondingly. This may correspond to different roles in the organization, such as product management, test & QA, etc.

Apply even more intelligence

And of course, as most readers will be wondering, more advanced machine learning algorithms beyond standard statistics can be employed in several places:

- Determine typical trend profiles by analyzing the data from a large number of projects.

- Another obvious application is the use of LLMs (Large Language Models) to replace statistical averages with automatic estimates from user story texts and bug descriptions.

However, the details are still under investigation and require access to large training sets from further real-world projects.

Further topics and considerations

- A project in a tracker usually spans multiple releases and often multiple products. For which of those a specific issue is planned can be indicated by labels, special columns, comments or notes. Sometimes it is even only implied indirectly, e.g. by the priority. Identifying and filtering issues that apply to the calculated release is one of the most common preparation steps.

- Bugs can be similarly assigned to a certain release, but there might as well be abstract acceptance criteria such as a maximum criticality or an allowed number of bugs per criticality. This has to be reflected in the corresponding part of the remaining work.

- To compute the averages, you have to select a representative time slice. It is usually a good idea to skip the very beginning of the project, when some parameters are still settling in. Alternatively, you can use trend analysis right from the start, which will give you the final parameters right away.

- If you have no or only very few estimates, you can compromise by using only the number of issues. In a second iteration, you should then try to derive different weights for different issue types (e.g. stories vs. bugs) to use these as proxy estimates.

- If you do not have sprints or fixed time iterations, all of the calculations above can easily be applied to calendar days or months instead.

Comparison to traditional methods

Plan/actual comparisons

Project controlling that only compares spent versus planned budget per period (e.g. the current month) neglects the actual progress. Consequently, it can only detect deviations from the spending plan, but not future budget overruns. It still remains important to map the project’s FTE and man days (MD) to financial numbers.

Built in reporting

As the reporting capabilities of the major issue trackers are very limited, especially when it comes to calculations, users can be tempted to apply what is available. This usually omits yet unknown or uncertain issues and thus a major part of the remaining work.

Critical path

Classical project management methods such as critical path or critical chain fail for most modern software projects, as they can solely handle known issues (or tasks). They sometimes remain valuable for specific questions, such as discovering time-dependent shortages of particular skills or resources, for example in penetration or integration testing.

Burn down charts

Burn down charts, as frequently applied in agile contexts, can provide good projections, especially for single-team projects. For these, they can visually indicate similar results as our quick 1st iteration above. But they have several restrictions:

- They have to be correctly computed and the results have to be correctly interpreted, which is unfortunately still far from being a given. For example, the representation of newly discovered scope is a frequent source of misunderstandings and can lull teams into a false sense of safety.

- Even if computed correctly, they are often misunderstood by recipients not deeply familiar with the matter. This may be one of the reasons why they are only briefly mentioned in newer versions of the Scrum guide, but no longer described in detail.

- Several parameters (capacity, velocity, discovery rate, bug rate, etc.) are assumed to be constant over the whole time. As discussed above, this is unrealistic in most large and longer lasting projects.

It is still helpful to add a complementary visualization to facilitate discussions. For the mentioned reasons, I recommend burn down charts only for sprint backlogs with relatively fixed scope. The better option for release backlogs is instead the burn up chart, which looks more complicated at first glance, but is less prone to misinterpretation. Burn up charts can be automatically plotted from the data used above, and can even be extended to include more details, such as intermediate states or discovery rate.
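The two lines of a burn up chart are simply cumulative sums, as the following sketch shows. It only prepares the data series; the function name and the numbers are assumptions for illustration, and plotting is left to the charting tool of your choice.

```python
from itertools import accumulate
from typing import List, Sequence, Tuple


def burn_up_series(done_per_sprint: Sequence[float],
                   discovered_per_sprint: Sequence[float],
                   initial_scope: float) -> Tuple[List[float], List[float]]:
    """Return (cumulative done, total scope) per sprint, in story points."""
    done_line = list(accumulate(done_per_sprint))
    scope_line = [initial_scope + s for s in accumulate(discovered_per_sprint)]
    return done_line, scope_line


# Illustrative numbers (assumed) for three sprints.
done_line, scope_line = burn_up_series(
    done_per_sprint=[40, 38, 42],
    discovered_per_sprint=[20, 25, 15],
    initial_scope=400,
)
# Vertical distance between the two lines = remaining known scope.
gap = [s - d for s, d in zip(scope_line, done_line)]
print(done_line, scope_line, gap)
```

Because the scope line is drawn separately, newly discovered work stays visible instead of being hidden in a slower-falling burn down line.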

Practical implementation

The built-in reporting capabilities of most issue trackers are limited in practice, especially when it comes to calculations. For a first quick result, you can of course export the backlog and do some calculations in a spreadsheet. For regular updates or larger projects with issues in the thousands though, this approach becomes impractical:

- Excel (or alike) becomes too sluggish to work with, and manual editing is highly error-prone.

- Data exports may be unexpectedly complicated. Jira for example has a fixed limit for exports of 1000 issues, so multiple export runs would have to be correctly combined. Furthermore new or updated issues may change the set of columns.

- More advanced calculations quickly make the tables convoluted.

- Usually some data cleaning is needed. For example, different teams may follow different conventions, and data can be missing or need to be extracted from labels, notes or external sources. Most of this has to be repeated for every update.
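To illustrate why combining several export runs needs some care, here is a small sketch that merges CSV chunks with differing column sets using only the Python standard library. It is not the tooling described in this article. The key column name `Issue key` and the sample data are assumptions, and the sketch presumes unique column names within each file.

```python
import csv
import io
from typing import Dict, List, Sequence, Tuple


def merge_exports(chunks: Sequence[str],
                  key: str = "Issue key") -> Tuple[List[str], List[Dict[str, str]]]:
    """Merge CSV export chunks into one table.

    Columns are the union over all chunks (in order of first appearance);
    if an issue occurs in several chunks, the last occurrence wins.
    """
    columns: List[str] = []
    merged: Dict[str, Dict[str, str]] = {}
    for text in chunks:
        reader = csv.DictReader(io.StringIO(text))
        for name in reader.fieldnames or []:
            if name not in columns:
                columns.append(name)
        for row in reader:
            merged[row[key]] = row
    # Fill columns missing in older chunks with empty strings.
    rows = [{c: row.get(c, "") for c in columns} for row in merged.values()]
    return columns, rows


# Illustrative chunks (assumed): the second export has an extra column
# and repeats an issue that was updated in the meantime.
chunk_1 = "Issue key,Summary\nA-1,Login\nA-2,Logout\n"
chunk_2 = "Issue key,Summary,Story Points\nA-2,Logout,3\nA-3,Search,5\n"
columns, rows = merge_exports([chunk_1, chunk_2])
print(columns, len(rows))
```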

Our tools are implemented on top of data science libraries that allow for simultaneous processing of large data sets. The information is gathered directly from the issue trackers, mostly via their APIs. Updates will then only require a re-run. Spreadsheets and BI dashboards can still be useful to present and distribute the readily calculated results.

Summary

The presented calculation gives good results for most software development projects using modern project management means. It is based on analyzing a backlog containing issues, which are individually still based on estimations and decisions. But because we are summing and extrapolating over many of those, we get into the range where the statistical law of large numbers applies. The calculation is even designed in a way that accounts for systematic errors and mis-estimations, as long as their magnitude does not undergo a sudden change.
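The effect of summing over many issues can be illustrated with a small simulation. It is a sketch under strong assumptions: every issue's actual effort deviates randomly and independently from its estimate, modeled here with a log-normal factor whose parameters are chosen arbitrarily.

```python
import random
from statistics import mean, pstdev


def relative_spread_of_total(n_issues: int, trials: int = 300,
                             seed: int = 1) -> float:
    """Standard deviation of the total effort divided by its mean.

    Each issue's effort is drawn independently from a log-normal
    distribution (an assumed model for per-issue estimation error).
    """
    rng = random.Random(seed)
    totals = [
        sum(rng.lognormvariate(0.0, 0.75) for _ in range(n_issues))
        for _ in range(trials)
    ]
    return pstdev(totals) / mean(totals)


small_backlog = relative_spread_of_total(10)
large_backlog = relative_spread_of_total(1000)
print(f"10 issues: {small_backlog:.1%}, 1000 issues: {large_backlog:.1%}")
```

For independent deviations, the relative spread of the total shrinks roughly with the square root of the number of issues. A systematic bias shared by all estimates does not average out this way; it is absorbed by the velocity, as long as it stays stable.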

Realistic forecasts are the tools to make the right decisions. It lies in the nature of things that this can mean bad news. But keep in mind that uncomfortable consequences are always easier to deal with as early as possible. Otherwise, decisions get postponed to a future point, when the necessary actions become much more expensive.

The remaining uncertainties get confined to a range that does not require escalation and can be managed on the operational level, which makes it a real game changer. The automatic implementation provides up-to-date data at low update cost. Necessary measures can be discussed and implemented much earlier, reducing wasted efforts and costs.

Feel free to contact me with any questions via oliver.ciupke@gmail.com or LinkedIn. Please include the topic “Release Calculation” in the subject or invite message.

How to Calculate Releases by Oliver Ciupke is licensed under CC BY-SA 4.0
